Projects

Helen Huang’s personal, research, and course projects.

Personal Projects

Passion projects I made because I thought they would be cool :)

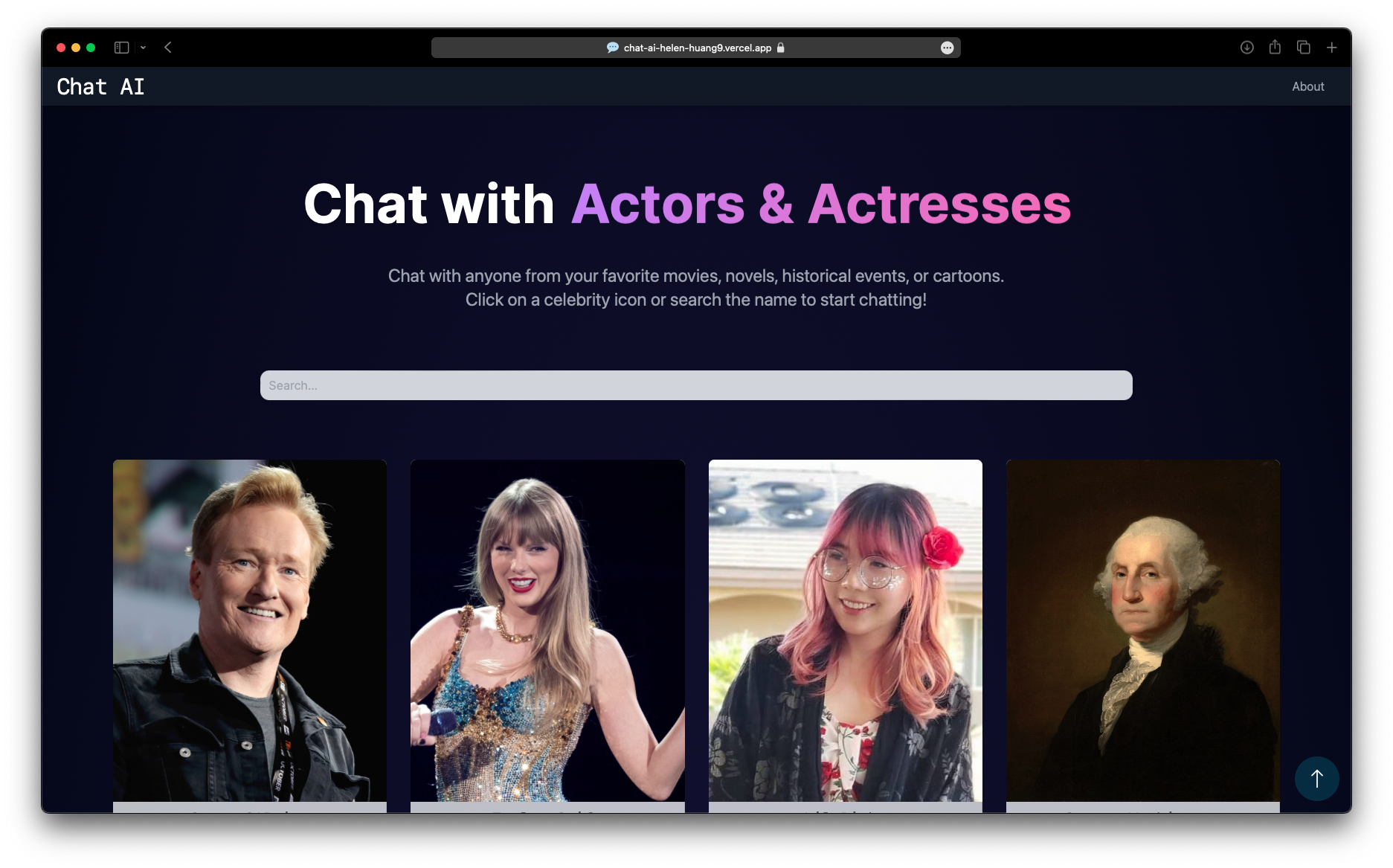

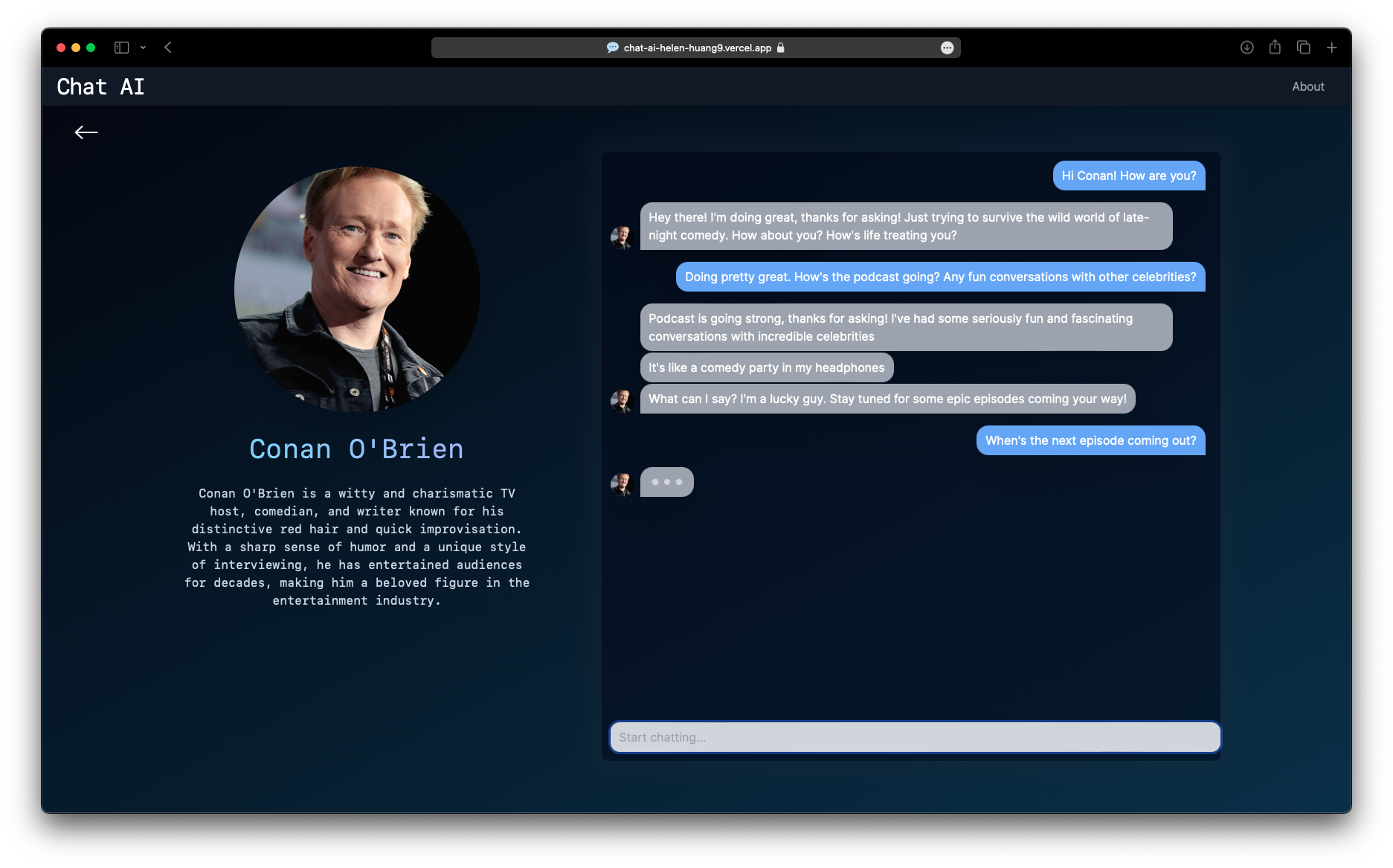

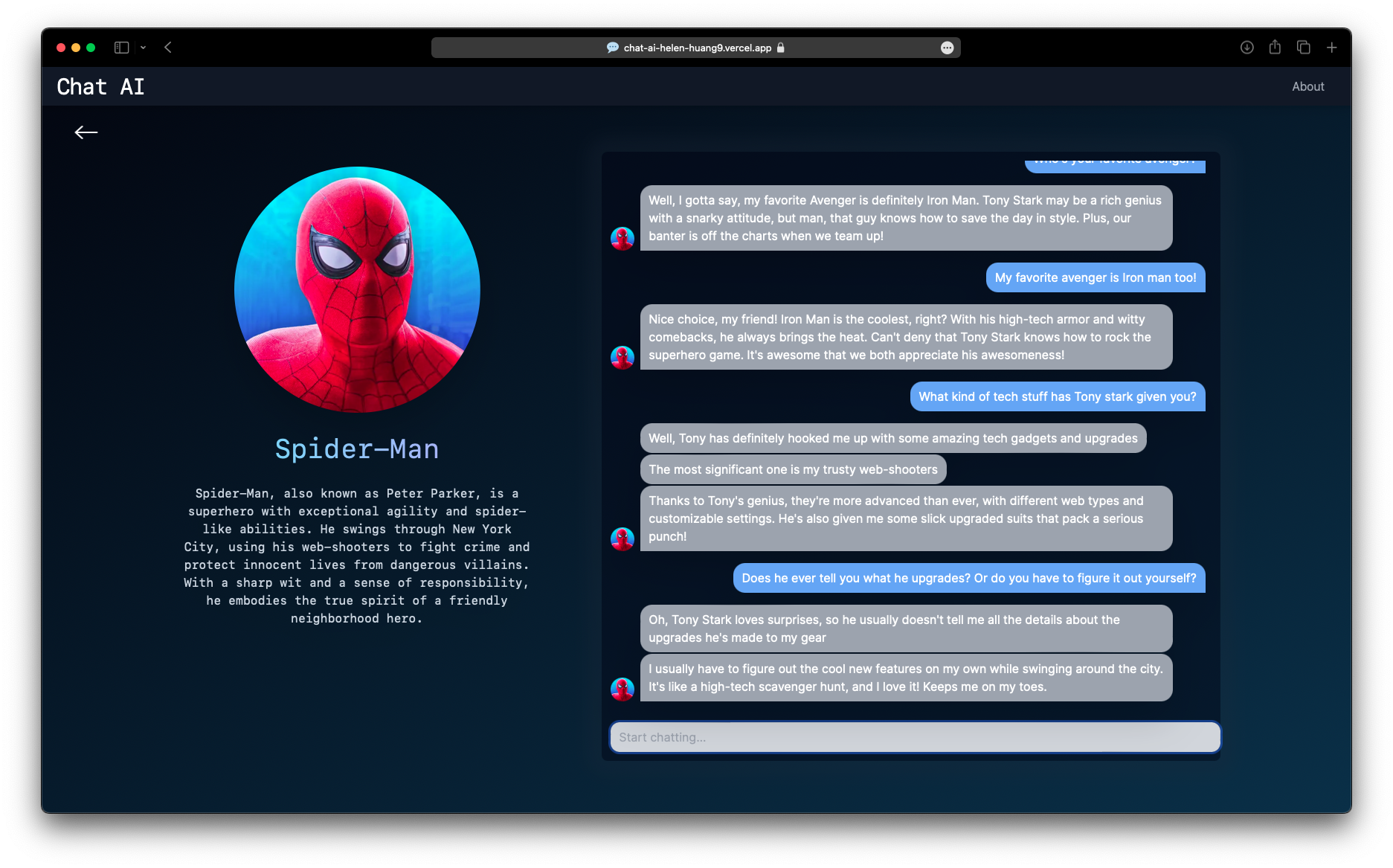

ChatAI Website

ChatAI is a full-stack website I designed and developed. Users can chat with AI versions of celebrities, famous movie and book characters, and even inanimate objects!

I used React, Next.js 13, and Tailwind CSS for the front-end, and MongoDB and OpenAI’s Chat Completions API (the API behind ChatGPT) for the back-end.

Check out the website here: chat-ai-helen-huang9.vercel.app

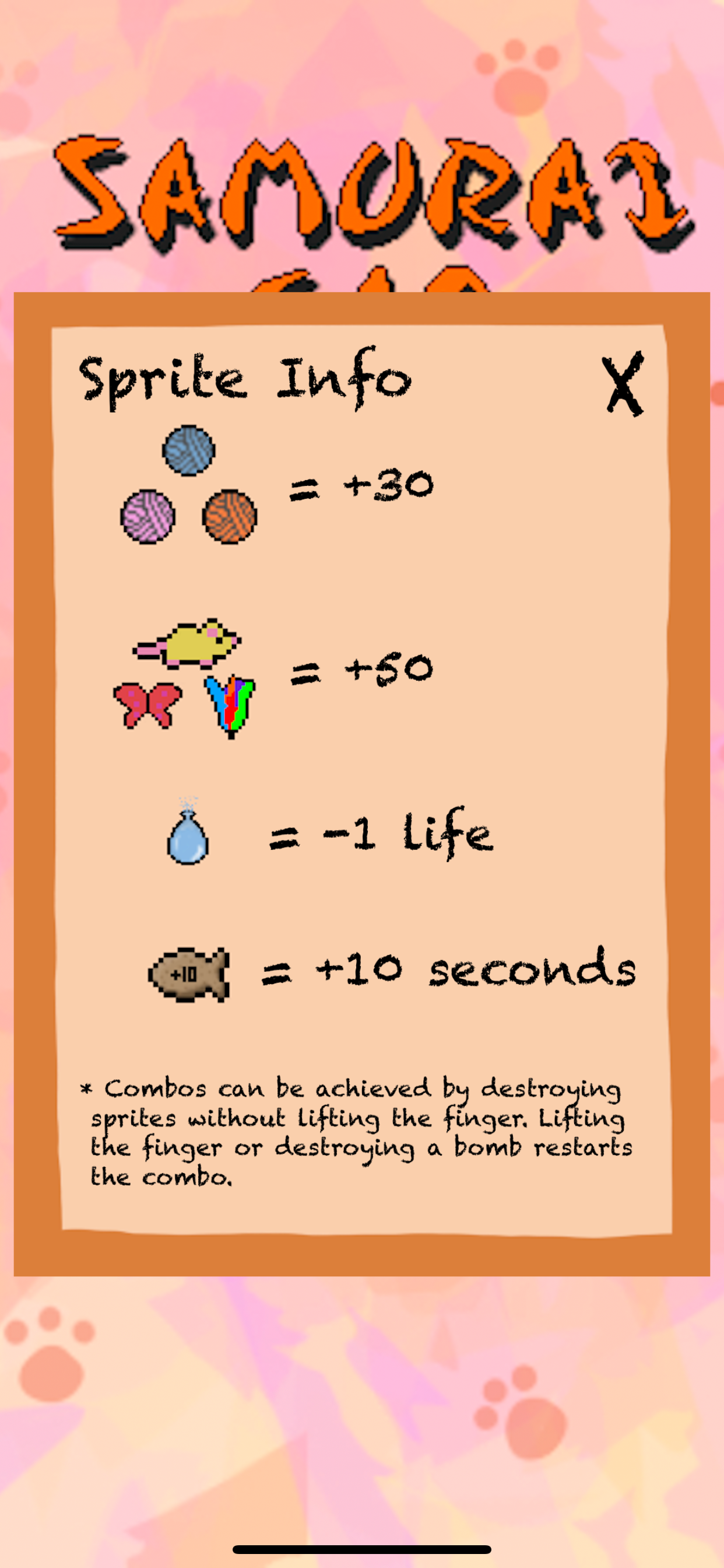

Cat Ninja iOS Game

Cat Ninja is an iOS game created in Swift using SwiftUI and SpriteKit. As a cat ninja, swipe cat toys and avoid water balloons. Score points and gain combos before the time runs out! [GitHub]

Artwork created by Helen Huang using Procreate.

Research

Research work under Prof. Sridhar in the Brown Interactive 3D Vision and Learning Lab.

NeRF Models

The following are some of the NeRF videos I helped produce. These videos show continuous 3D scenes learned by a deep neural network from a sparse set of images. The object models and camera data were created and sampled in Blender.
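
For anyone curious how a trained NeRF becomes video frames: each pixel is rendered by marching a ray through the scene, querying the network for a density and color at sample points, and alpha-compositing those samples front to back. The sketch below shows only that standard compositing step from the original NeRF formulation, assuming the densities, colors, and sample spacings are already given (the network and ray sampling are omitted). `composite_ray` is a hypothetical name, and this is not the lab's code.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one ray with the NeRF volume rendering quadrature.

    sigmas[i]: density at sample i (assumed non-negative)
    colors[i]: (r, g, b) color at sample i
    deltas[i]: distance from sample i to sample i + 1
    Returns the rendered (r, g, b) and the accumulated opacity of the ray.
    """
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j): light surviving so far
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample to the pixel
        for ch in range(3):
            rgb[ch] += weight * color[ch]
        transmittance *= 1.0 - alpha            # equals exp(-sigma * delta)
    return tuple(rgb), 1.0 - transmittance
```

With an empty first sample (density 0) and a half-opaque green second sample (density·spacing = ln 2), the pixel comes out as half-intensity green with 50% accumulated opacity.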

Course Projects

Cool final projects I made in my CS courses at Brown.

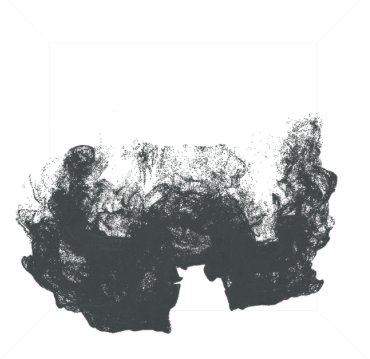

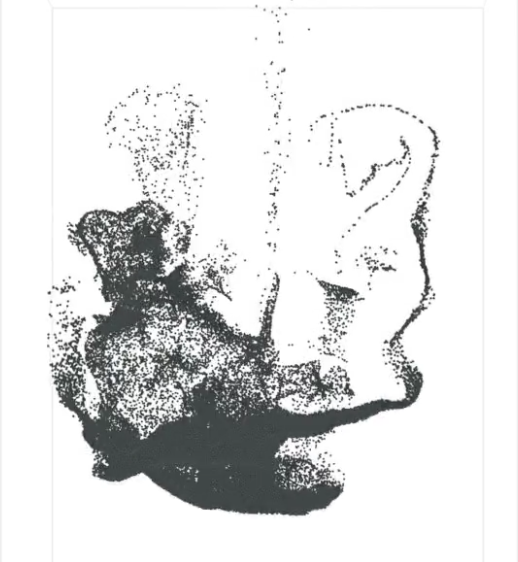

Ink Simulation

I created a physics-based simulation of ink diffusing in water for my CSCI 2240 Advanced Computer Graphics project with Austin Miles, Mandy He, and Tianran Zhang. The project was based on two papers: “Interactive Visual Simulation of Dynamic Ink Diffusion Effects” and “Fluid Flow for the Rest of Us.”

The water is simulated as a velocity grid, which is updated every timestep using the Navier-Stokes equations. After the water is updated, the ink particles are convected through the velocity grid. Each timestep is saved as a .ply file containing the positions of all the ink particles, and these frames were then rendered sequentially in Blender. Implemented in C++. [GitHub]

I independently created a real-time version of this project in Swift using Metal. [GitHub]
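
To make the particle convection and per-frame .ply output above a bit more concrete, here is a minimal 2D sketch in Python. It assumes the fluid solver has already produced velocities `u`, `v` stored at the nodes of a square grid with spacing `h`; the Navier-Stokes update itself is omitted. The function names are hypothetical, the midpoint (RK2) step is one common choice of integrator, and none of this is the project’s actual C++ code.

```python
def sample_velocity(u, v, x, y, h):
    """Bilinearly interpolate a collocated 2D velocity grid at world position (x, y).

    u[i][j] and v[i][j] hold the velocity at grid node (i * h, j * h); the grid
    must be at least 2 x 2. Positions outside the grid are clamped to its border.
    """
    nx, ny = len(u), len(u[0])
    # Continuous grid coordinates, clamped so the 2 x 2 stencil stays in bounds.
    gx = min(max(x / h, 0.0), nx - 1.0)
    gy = min(max(y / h, 0.0), ny - 1.0)
    i0, j0 = min(int(gx), nx - 2), min(int(gy), ny - 2)
    fx, fy = gx - i0, gy - j0

    def lerp2(f):
        bottom = f[i0][j0] * (1 - fx) + f[i0 + 1][j0] * fx
        top = f[i0][j0 + 1] * (1 - fx) + f[i0 + 1][j0 + 1] * fx
        return bottom * (1 - fy) + top * fy

    return lerp2(u), lerp2(v)


def advect_particles(particles, u, v, h, dt):
    """Carry each ink particle through the velocity field with one midpoint (RK2) step."""
    moved = []
    for x, y in particles:
        vx1, vy1 = sample_velocity(u, v, x, y, h)
        # Half step to the midpoint, then use the midpoint velocity for the full step.
        mx, my = x + 0.5 * dt * vx1, y + 0.5 * dt * vy1
        vx2, vy2 = sample_velocity(u, v, mx, my, h)
        moved.append((x + dt * vx2, y + dt * vy2))
    return moved


def to_ply(particles):
    """Serialize particle positions as an ASCII PLY point cloud (z = 0 in this 2D sketch)."""
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(particles)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    lines += [f"{x} {y} 0.0" for x, y in particles]
    return "\n".join(lines) + "\n"
```

In a per-frame loop, the string from `to_ply(particles)` would be written to one file per timestep (for example, a hypothetical `ink_0000.ply`, `ink_0001.ply`, …), matching the per-timestep .ply output described above.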

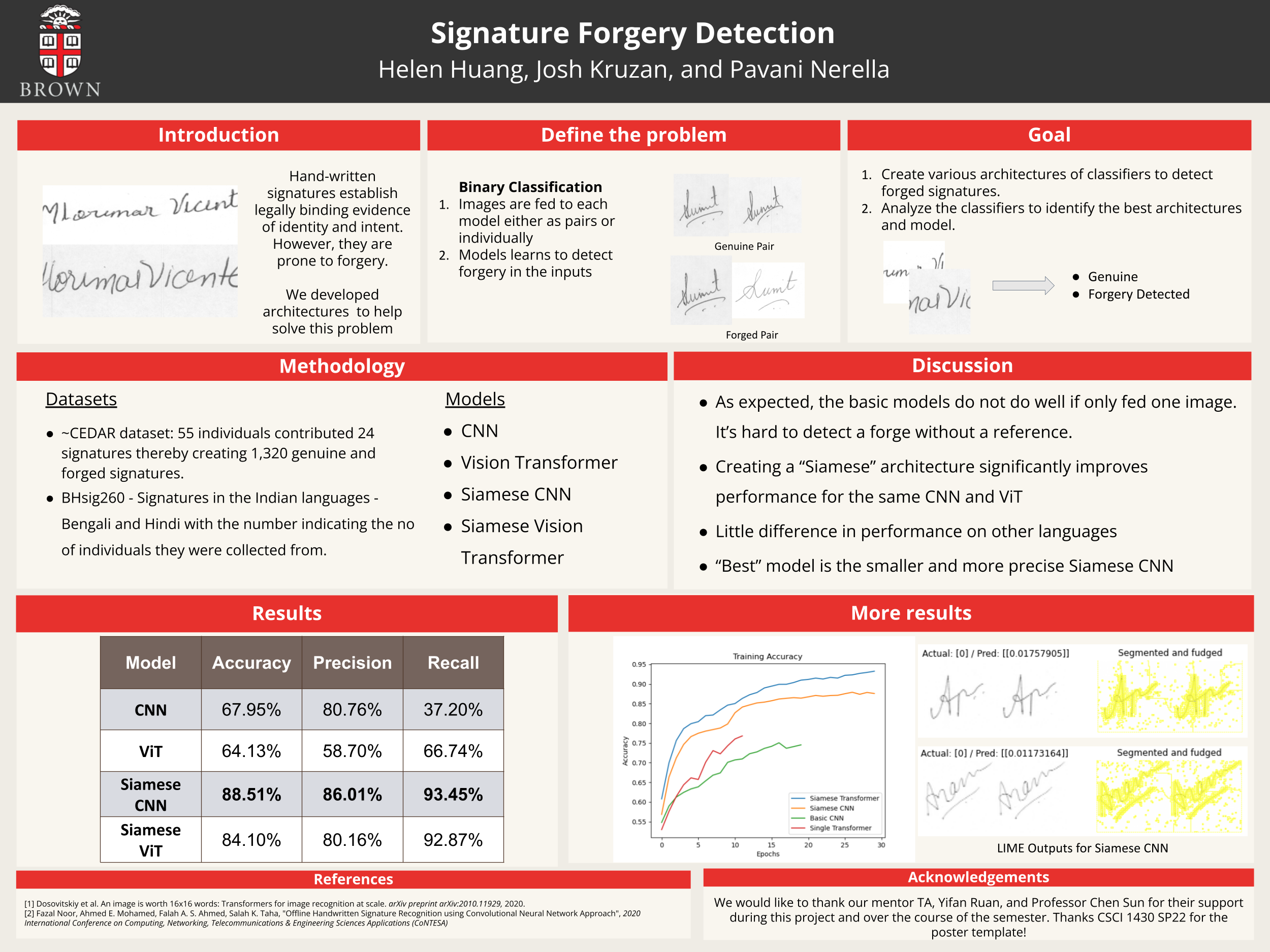

Signature Forgery Detector

I created a signature forgery detector for my CSCI 1470 Deep Learning final project with Josh Kruzan and Pavani Neralla.

We implemented several different models to compare their accuracies: a CNN, a Vision Transformer, a Siamese CNN, and a Siamese Vision Transformer. We then examined which signature features the models relied on for classification by running LIME (Local Interpretable Model-Agnostic Explanations) on our models and datasets. Implemented in Python using TensorFlow. [GitHub]

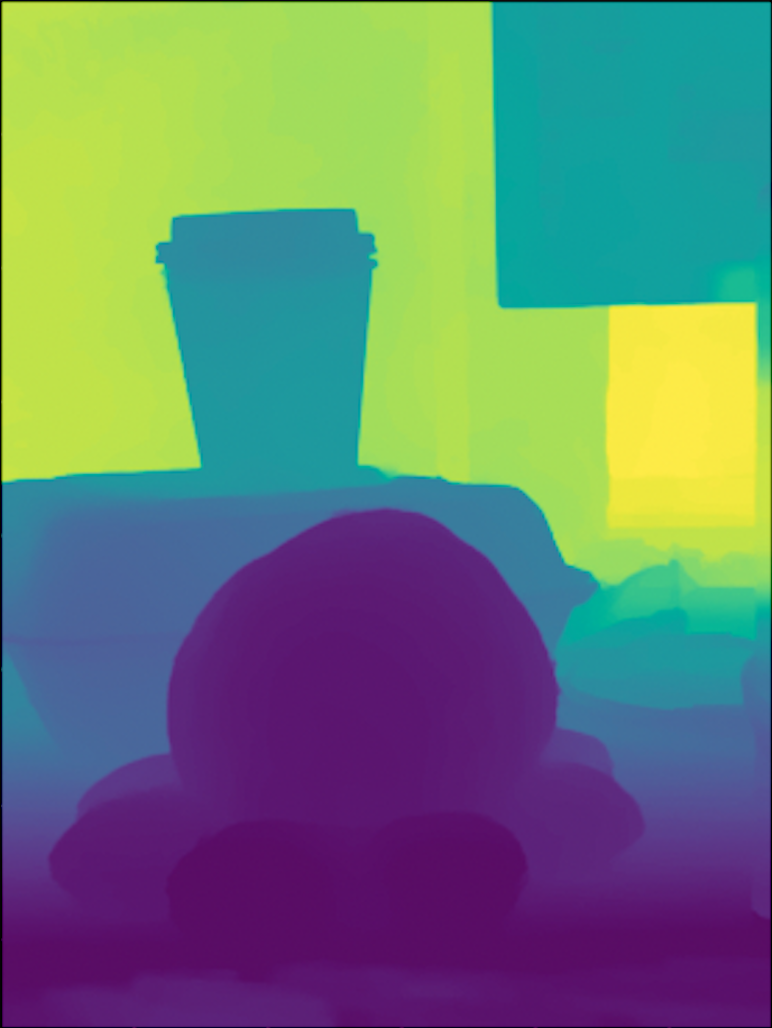

Depth Capture System

I created a depth capture system as part of my CSCI 1430 Computer Vision final project with Kate Nelson, Angela Xing, and Lo McKeown. I developed an app that captures RGB images and their corresponding depth data, then visualizes the scene as a 3D voxel model. Implemented in Swift and Python. [GitHub]
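
One common way to go from an RGB image plus depth to a voxel model is to back-project each pixel through a pinhole camera model and bin the resulting 3D points into a grid. The sketch below assumes hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (in pixels), depth in meters with 0 meaning “no reading”, and image coordinates where y points down. It illustrates the general technique and is not the project’s actual pipeline.

```python
import math


def depth_to_voxels(depth, colors, fx, fy, cx, cy, voxel_size):
    """Back-project a depth map through a pinhole camera and bin the points into voxels.

    depth[r][c]: depth in meters at pixel row r, column c (0 means no reading)
    colors[r][c]: (R, G, B) color of the same pixel
    Returns {(i, j, k): (R, G, B)} with the mean color of the points in each occupied voxel.
    """
    sums = {}  # voxel index -> [sum_R, sum_G, sum_B, point_count]
    for r, row in enumerate(depth):
        for c, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a depth reading
            # Pinhole back-projection from pixel (c, r) at depth z to camera space.
            x = (c - cx) * z / fx
            y = (r - cy) * z / fy
            key = (math.floor(x / voxel_size),
                   math.floor(y / voxel_size),
                   math.floor(z / voxel_size))
            acc = sums.setdefault(key, [0, 0, 0, 0])
            for ch in range(3):
                acc[ch] += colors[r][c][ch]
            acc[3] += 1
    return {k: tuple(a[ch] / a[3] for ch in range(3)) for k, a in sums.items()}
```

A dictionary keyed by voxel index keeps only occupied voxels, which suits sparse captured scenes better than allocating a dense 3D array.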

OpenGL Underwater Scene

I created an underwater scene for my CSCI 1230 Computer Graphics final project with Kate Nelson and Angela Xing. We implemented randomly generated terrain, Bézier curve camera movement, and L-system coral plants using C++ and OpenGL.
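
The two named techniques are compact enough to sketch. An L-system grows a plant by repeatedly rewriting every symbol of a string in parallel, and a cubic Bézier curve gives a smooth camera path from four control points. The Python below is only an illustration (the project itself was C++), and the branching rule `F -> F[+F]F` is a hypothetical example, not the project’s coral grammar.

```python
def expand_lsystem(axiom, rules, iterations):
    """Rewrite every symbol in parallel using `rules`, `iterations` times.

    Symbols without a rule (e.g. '+', '[', ']') are copied unchanged.
    """
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bézier curve at t in [0, 1] using the Bernstein basis.

    Control points are equal-length tuples, e.g. (x, y, z) camera positions.
    """
    u = 1.0 - t
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return tuple(b0 * c0 + b1 * c1 + b2 * c2 + b3 * c3
                 for c0, c1, c2, c3 in zip(p0, p1, p2, p3))


# Two rounds of a hypothetical branching rule.
print(expand_lsystem("F", {"F": "F[+F]F"}, 2))  # F[+F]F[+F[+F]F]F[+F]F
```

In a typical renderer, each `F` in the expanded string is drawn as a branch segment by a “turtle” that turns on `+`/`-` and saves/restores its position on `[`/`]`; stepping `t` from 0 to 1 over successive frames moves the camera along the curve from `p0` to `p3`.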

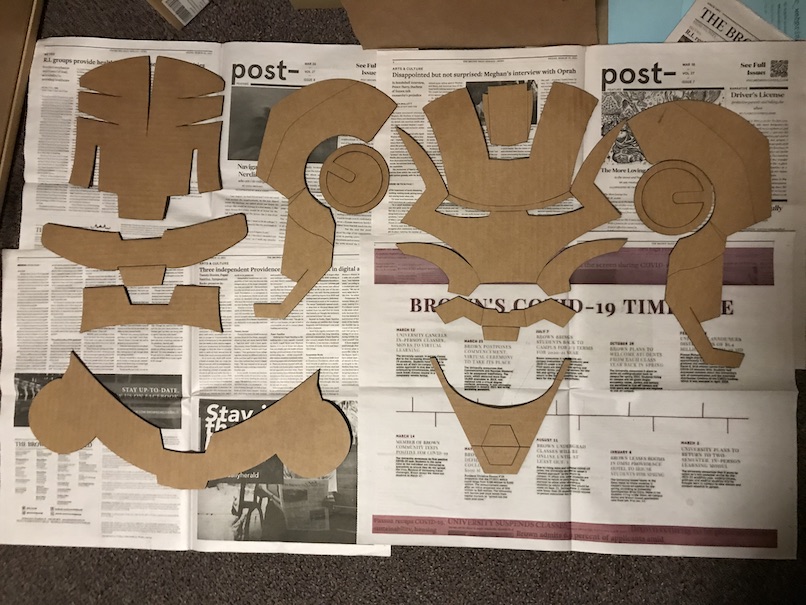

Iron Man Helmet

I created a functioning Iron Man helmet for my ENGN 0032 Intro to Engineering: Design final project with Kevin Hsu and Angela Xing.

“Field” - Instrumental Song

I wrote, composed, and produced a song for my MUSC 0400B Intro to Popular Music Theory final project.